abhiraj-blog-3

🧠 Mac Mini for Local AI? Thought About It…#

Lately, I’ve seen a lot of people flexing their Mac Mini stacks for local AI/LLM workloads. Seemed cool, minimal, power-efficient. So I thought:

“Why not grab a second-hand Mac Mini and connect it to my homelab? Just for local AI stuff?”

Well… turns out there’s a catch.

🚧 The Problem with Cheap Mac Minis#

Most second-hand Mac Minis come with 8GB or 16GB of Unified Memory, and yeah, macOS can allocate up to 75% of that to GPU compute. But if you’re trying to run models like:

llama3:13bdeepseek-coder:6.7bphi3:14b

…it’s a bottleneck fast.

Unified RAM isn’t magic. You hit the wall real quick with anything beyond 7B models. That’s a dealbreaker if you’re aiming for serious offline AI.

💡 My DIY Setup: Ryzen + RTX = Win#

I looked into my existing rig and realized I’m not far off:

- 🧠 Ryzen 5 3600

- 🔧 Planning to add a used RTX 3060 12GB

That combo? Way more powerful and scalable than a Mac Mini.

With 12GB VRAM, I can comfortably run:

llama3:8bwith fast response timesphi3:14bfor long-form & document-based tasksqwen2.5-coder:7bfor coding assistant stuff

All locally. No API keys. No cloud spying. No monthly fees.

✅ Final Verdict#

| Option | Cost | Performance | Flexibility | Worth it? |

|---|---|---|---|---|

| Mac Mini (8–16GB) | 💰💰 | ❌ Lags on >7B | ❌ macOS limits | 🤷 Only if you’re rich |

| Ryzen + RTX 3060 12GB | 💰 | ✅ Smooth on 8B–14B | ✅ Full control | 💯 All day |

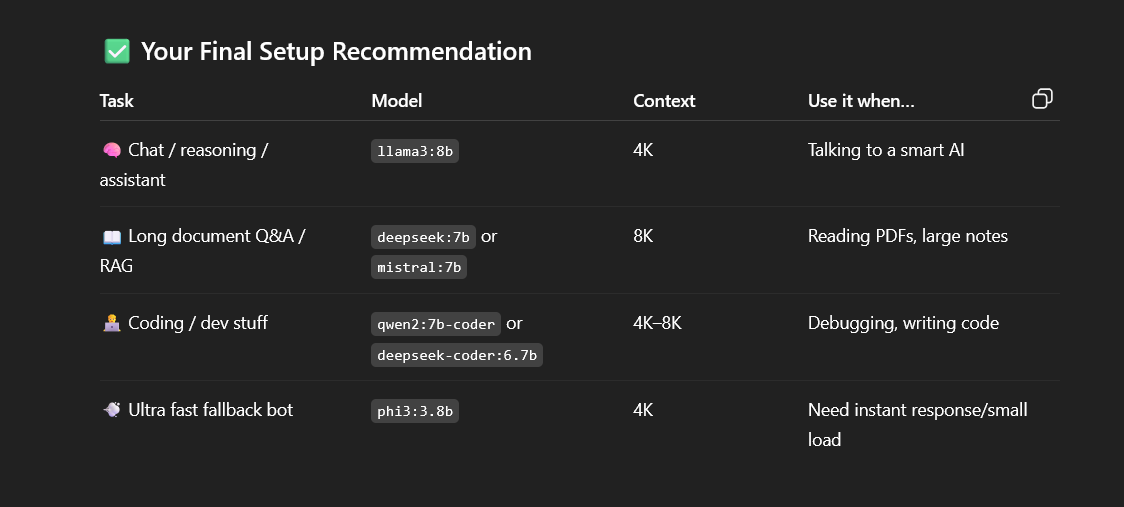

🧠 My LLM Loadout (Running on Ollama)#

Here’s what I’m planning to self-host:

llama3:8b-instruct– 💬 short chats, fast tasksphi3:14b– 📄 document processing, RAG, long-contextqwen2.5-coder:7b– 💻 dev assistant, code completion

Paired with Ollama and LM Studio, this is gonna replace Gemini, Claude, and DeepSeek for most use cases.

💬 Real Talk#

If you’re building a local AI setup, don’t get distracted by aesthetics.

Mac Mini is quiet and slick, but Ryzen + NVIDIA gives you raw power at a fraction of the cost.

Stay tuned for more self-hosted AI experiments. Peace ✌️